What is the difference between a MegaByte (MB) and a MebiByte (MiB)?

If you were taught that 1 MB = 1024 KB, you were taught wrong. 1 MB actually equals 1000 KB, while 1 MiB = 1024 KiB. The mebi prefix in mebibyte (MiB) is a contraction of mega and binary, which means the unit is a power of 2, hence the familiar values such as 32, 64, 128, 256, 512, 1024, 2048 and so on.

The megabyte (MB) on the other hand is always a power of 10, so you’ve got 1 KB = 1000 bytes, 1 MB = 1000 KB and 1 GB = 1000 MB.
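To make the two definitions concrete, here is a minimal Python sketch of the conversion between them. The constant and function names (`MB`, `MIB`, `mib_to_mb`, `mb_to_mib`) are my own illustrative choices, not part of any standard library.

```python
# Decimal (SI) multiples: powers of 1000.
KB, MB, GB = 1000, 1000**2, 1000**3
# Binary (IEC) multiples: powers of 1024.
KIB, MIB, GIB = 1024, 1024**2, 1024**3


def mib_to_mb(mib: float) -> float:
    """Convert mebibytes to megabytes by going through plain bytes."""
    return mib * MIB / MB


def mb_to_mib(mb: float) -> float:
    """Convert megabytes to mebibytes by going through plain bytes."""
    return mb * MB / MIB


print(mib_to_mb(1))  # one mebibyte is slightly more than one megabyte
print(mb_to_mib(1))  # one megabyte is slightly less than one mebibyte
```

Both functions convert to bytes first, so the same pattern should carry over to any other pair of units in the list.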

Differences between Operating Systems

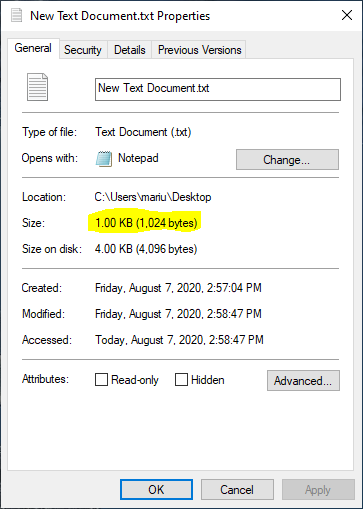

Almost every operating system handles these units differently, and Windows is the odd one out. It calculates everything in binary units but then labels the result KB/MB/GB, in effect calling a mebibyte a megabyte. So a 1024-byte file will be reported as 1.00 KB, while in reality it is 1.00 KiB or 1.024 KB.

You can test this yourself by creating a TXT file containing 1000 plain ASCII characters (1 character = 1 byte), and then inspecting the file info.
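If you would rather script that experiment, the following is a rough Python sketch. It writes 1000 ASCII characters to a temporary file and prints the size both ways. The file name `sample.txt` and the helper `describe_size` are hypothetical names used only for this illustration.

```python
import os
import tempfile


def describe_size(n_bytes: int) -> str:
    """Express a byte count in decimal kilobytes and binary kibibytes."""
    return f"{n_bytes} bytes = {n_bytes / 1000:.3f} KB = {n_bytes / 1024:.3f} KiB"


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.txt")  # hypothetical file name
    # ASCII encoding guarantees one byte per character; newline="" avoids
    # any platform-specific line-ending translation.
    with open(path, "w", encoding="ascii", newline="") as f:
        f.write("a" * 1000)
    size = os.path.getsize(path)

print(describe_size(size))  # 1000 bytes = 1.000 KB = 0.977 KiB
```

The same file is exactly 1 KB by the decimal definition but a little short of 1 KiB, which is the gap a binary-based file manager hides behind a "KB" label.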

This kind of reporting leads to all kinds of confusion, and users often feel ripped off when they buy a 256 GB hard drive only to have Windows report it as 238 GB (what it actually means is 238 GiB, which equals 256 GB).
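The arithmetic behind that "missing" space can be sketched in a few lines of Python. The function `describe_drive` and its `windows_style` key are my own naming, and the labeling simply follows the behavior described above; it is not a reproduction of how Windows actually rounds or formats sizes.

```python
GB = 1000**3   # gigabyte, decimal definition
GIB = 1024**3  # gibibyte, binary definition


def describe_drive(advertised_gb: int) -> dict:
    """Show one drive capacity under three different labeling conventions."""
    n_bytes = advertised_gb * GB      # what the manufacturer sells
    binary_value = n_bytes / GIB      # the same bytes counted in GiB
    return {
        "bytes": n_bytes,
        "si": f"{advertised_gb} GB",
        "iec": f"{binary_value:.0f} GiB",
        # Assumption for illustration: binary value with a decimal label.
        "windows_style": f"{binary_value:.0f} GB",
    }


print(describe_drive(256))
```

For a 256 GB drive this gives `238 GiB` under the IEC label and `238 GB` under the mislabeled one, with the byte count unchanged in every case.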

Operating systems that use the power of 10 definition include macOS, iOS, Ubuntu and Debian. This way of measuring is also consistent with the other uses of the SI prefixes in computing, such as CPU clock speeds or measures of performance.

Note: macOS measured memory in power of 2 units before Mac OS X 10.6 Snow Leopard, which switched to units based on powers of 10. iOS made the same switch with iOS 10.

Dealing with conflicting definitions

The mebibyte was designed to replace the megabyte in its binary sense, as that usage conflicted with the definition of the prefix mega in the International System of Units (SI). But despite being established by the International Electrotechnical Commission (IEC) in 1998 and accepted by all major standards organizations, it is not widely acknowledged within the industry or media.

The IEC prefixes are part of the International System of Quantities – and IEC has further specified that the kilobyte should only be used to refer to 1000 bytes. This is the current modern standard definition for the kilobyte.

Comparison of decimal and binary units

In the end, I leave you with a table containing the names of all the different units for multiples of bytes. One thing to note here is that the ronna- and quetta- prefixes were adopted recently, in 2022, by the International Bureau of Weights and Measures (BIPM), but only for the power of 10 units. The binary counterparts were given in a consultation paper but they have not been adopted yet by either IEC or ISO.

| Decimal value | Metric | Binary value | IEC | Memory |
|---|---|---|---|---|
| 1 | B byte | 1 | B byte | B byte |
| 1000 | kB kilobyte | 1024 | KiB kibibyte | kB kilobyte |
| 1000² | MB megabyte | 1024² | MiB mebibyte | MB megabyte |
| 1000³ | GB gigabyte | 1024³ | GiB gibibyte | GB gigabyte |
| 1000⁴ | TB terabyte | 1024⁴ | TiB tebibyte | TB terabyte |
| 1000⁵ | PB petabyte | 1024⁵ | PiB pebibyte | |
| 1000⁶ | EB exabyte | 1024⁶ | EiB exbibyte | |
| 1000⁷ | ZB zettabyte | 1024⁷ | ZiB zebibyte | |
| 1000⁸ | YB yottabyte | 1024⁸ | YiB yobibyte | |
| 1000⁹ | RB ronnabyte | | | |
| 1000¹⁰ | QB quettabyte | | | |
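One consequence of the two value columns is that the gap between a decimal unit and its binary counterpart widens with every step up. Here is a small Python sketch of that, assuming nothing beyond the powers listed in the table; the names `PREFIXES` and `binary_excess_percent` are made up for this example.

```python
# Decimal/binary prefix pairs, in order of increasing power (1 through 8).
PREFIXES = [
    "kilo/kibi", "mega/mebi", "giga/gibi", "tera/tebi",
    "peta/pebi", "exa/exbi", "zetta/zebi", "yotta/yobi",
]


def binary_excess_percent(power: int) -> float:
    """How much larger 1024**power is than 1000**power, in percent."""
    return (1024**power / 1000**power - 1) * 100


for power, name in enumerate(PREFIXES, start=1):
    excess = binary_excess_percent(power)
    print(f"{name:<11} 1024^{power} is {excess:.1f}% larger than 1000^{power}")
```

The difference starts at 2.4% for kilo versus kibi and grows from there, which is why the mismatch is far more noticeable on a large drive than on a small file.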

You might want to change the typo since the purpose of this page is to help clear up confusion regarding these terms. Saying that 1 KB = 100 bytes will just create unnecessary confusion due to a missing zero, since one KB = 1000 and not 100. Thanks for the useful explanation nonetheless.

So Microsoft engineers were taught wrong, and nobody corrected them in 35 years. Is that what you’re implying?

The decimal prefix “mega-” was introduced in resolution 12 of the 11th CGPM (1960), and it applied to any unit within the scope of that document. The byte is neither an SI unit nor an SI-derived unit. Thus, the `M=10^6` definition from SI does not need to apply to it.

The prefix “mega-” does not always mean “a million”. It can mean different things in different contexts. One “megaproject” isn’t equal to a million projects (and one “kitten” isn’t equal to a thousand “ittens”). If you ever see kilofeet or centipounds being thrown around, you’d be wise to check exactly what the prefix means on a case-by-case basis. Why? Because they are not SI units.

Computers operate with bits. A transistor has two states, not 10. Information and entropy are defined in bits (there’s log2 in there, not log10). Thus, in computer science decimal SI prefixes are not used in relation to bits or bytes. The reason is pretty straightforward: 1000 is not a round number. If you have 10 bits to address a memory buffer, its size will be 1024 cells. No one is going to call it 1000 and have 24 left over.

So what exactly does “megabyte” mean? A commonly cited source for this is JESD100B.01, which defines mega (M), as a prefix to units of semiconductor storage capacity, to be a multiplier of 1,048,576 (2^20 or K^2, where K = 1024), while acknowledging its ambiguity.

In other words, this is the current status quo:

– `MiB = 1,048,576 B` – recommended usage

– `MB = 1,048,576 B` – acceptable usage

– `MB = 1,000,000 B` – plain wrong

As of now, the decimal megabyte (metric megabyte if you will) is only used by hard disk manufacturers acting in bad faith. I’d much prefer not to see it anywhere else.

Hahaha

Taught wrong?

This change occurred when the Europeans (SI) decided to create this new standard, in which base 2 sizes are now based on base 10 sizes (which makes no sense). See ISO 1000:1992/AMD 1:1998.

So prior to that, 1024 KB was universally accepted as a MB. Microsoft brought the changes over in the early 2000s, and UNIX never did.

This means YOU were taught wrong, and the Europeans just wanted to be different, or conform to the metric system (base 10), which doesn’t apply to units of computing.

This change caused havoc for consumers purchasing RAM, hard drives and other peripherals.